Do You Have to Write a Review if You Check in on Yelp

Business owners are often frustrated to find positive reviews from their customers filtered out by Yelp. Why does this happen, and what can be done about it?

If you're a business owner or manager, there's a decent chance that you've spent some time obsessing over your Yelp reviews. (If you're not paying attention to your reviews, you should be; digital PR is a pretty significant facet of Internet marketing.) And if that's the case, then you've probably fumed over every positive review that's been condemned to the purgatory known as Yelp's "not currently recommended" section. And you're probably assuming that this is because you're not paying for Yelp's PPC services. Let's find out if that's true or not.

For those who aren't aware, if you scroll down to the bottom of a business's Yelp page, you'll see light gray text that says "X other reviews that are not currently recommended."

"Not recommended reviews" are reviews that have been filtered out by Yelp and not counted.

If a Yelp visitor chooses to dig deep and read them, they can. But these reviews are hard to find, and they don't contribute to the business's Yelp rating or review count.

According to Yelp, their algorithm chooses not to recommend certain reviews because it believes the flagged review is fake, unhelpful, or biased. However, some reviews are filtered simply because the reviewer is inexperienced or not attuned to the tastes of most of Yelp's users, or because of other concerns that have nothing to do with whether the Yelp user's recounting of their experience is accurate. Yelp says that roughly 25% of all user reviews are not recommended by the algorithm.

While Yelp at least admits that reviews may be filtered out simply because the reviewer isn't a frequent Yelp user, there's still a lot that's unclear. That's a problem, because the vagueness of this process could provide sufficient cover to conceal bias or unethical behavior on Yelp's part.

What really determines whether a review is flagged by Yelp's filtering algorithm?

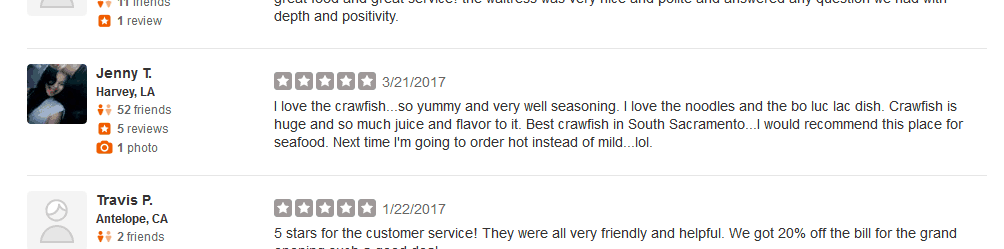

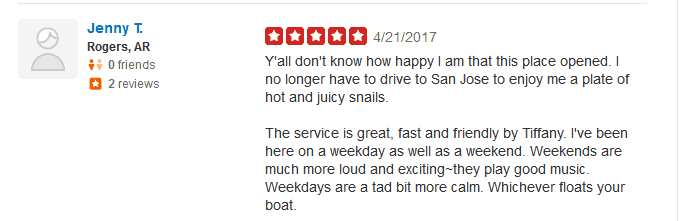

If you take some time to scroll through a few Yelp pages, you'll see reviews left by people who have written one review and don't even have a profile image, while reviews from more established Yelp users end up in the dustbin.

Why is the review above written by a user with 52 friends and 5 reviews filtered out, while the one below makes the cut?

I've had to deal with Yelp issues when working with clients, and have written about Yelp in the past (see my article on "Dealing With Fake Reviews on Yelp"). In contemplating the many frustrating issues with Yelp, I've long wondered if it would be possible to determine whether a given review would be more or less likely to be filtered out by Yelp's algorithm.

The core of that question is, what does Yelp consider to be the critical components of a review's trustworthiness? I decided to try and find out.

What Yelp doesn't want you to know, and for good reason.

There is a key limiting factor in any analysis of visible versus filtered reviews: you cannot look at the user profile of someone whose review is not recommended. It's not clickable. So any comparison of the two classes of reviews can't incorporate in-depth profile data (time spent on Yelp, their "Things I Love" list, whether a person has chosen a custom URL for their Yelp profile, etc.).

There's a reason for that: Yelp doesn't want people to know how their algorithm works. If we knew exactly how it worked, then we could game it. This significantly hampers the ability of an outsider to penetrate the machinations of Yelp's algorithm.

Still, there are a few things of which we're pretty certain, but which we can't analyze in a meaningful way:

First, do not ask customers to write a Yelp review while they're at your business.

Yelp's site and mobile phone application can easily determine your physical location when you submit a review. If you submit a review while you're at the business's location, Yelp will be able to tell, and it's extremely likely that they'll filter the review. A good way to get around this is to send a follow-up email to the customer a few days after you help them, asking how their experience was and inviting them to leave a review if it was positive.

Don't provide customers with a direct link to your Yelp page if you're asking them to review your business.

Yelp can look at the referring domain name, and if they see that the person reviewing Doug's Fish Tank Shop was referred by dougsfishtanks.com, they'll know that the customer was specifically referred by you and filter the review. Instead, simply say, "Please visit Yelp.com, look up our business, and leave us a review." This eliminates the suspicious linking that would otherwise lead to the review being filtered.

The timing of reviews matters.

If you hold a day-long promotion during which you offer customers some sort of deal if they leave a positive review for your business on Yelp, what Yelp is going to see is that a business which had previously received only a handful of reviews over several years is suddenly getting multiple reviews on the same day. That's going to look just a teensy bit suspicious, and all of those reviews are going to end up on the Isle of Misfit Reviews, along with all of the other filtered reviews. If you insist on having some sort of promotion, spread it out over time. Make it part of your standard follow-up communication, as suggested above, rather than some sort of special event that immediately puts Yelp on high alert.

We can't attest to the above based on statistics and analysis, because Yelp keeps that data in the digital equivalent of Fort Knox. But based on experience, we can infer that the above is true.

As a result, my analysis is restricted to only the information that's publicly available to anyone who decides to spend a few hours crawling through Yelp with an Excel spreadsheet. Essentially, my analysis assumes that all of the reviews that I looked at were left by honest individuals who weren't coerced by business owners or acting on an agenda.

So, with that supposition in mind, what determines whether a Yelp review is filtered?

Designing a data analysis of Yelp reviews.

Ultimately, I chose to focus on five review variables: whether the review's author has a profile image, the number of friends they have, the number of reviews they've written, how many photos they've submitted, and the rating of the review. I opted to choose five random businesses in the local Sacramento area: a restaurant, auto repair shop, plumber, clothing shop, and golf course. I would collect this information for each and every visible and filtered review for these five businesses, and see if the comparisons made anything clear.

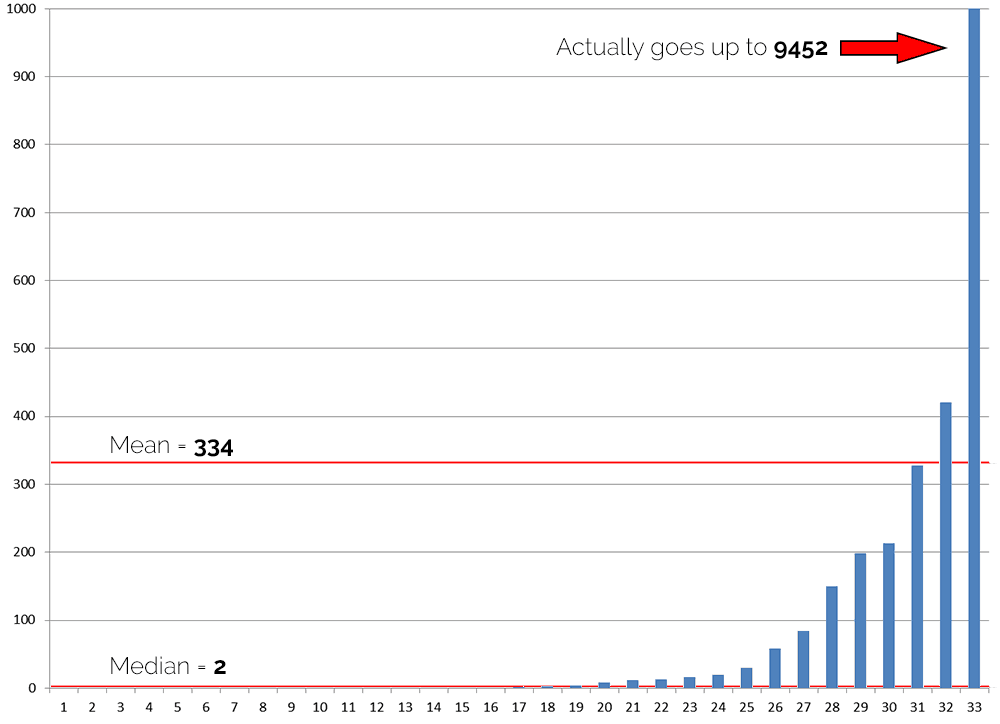

Now, there is a complicating factor for any comparison of Yelp reviews. In a phrase: power users. Many Yelp users are very low-key, leaving only a small handful of reviews and primarily using the service to view reviews left by other users. But there is a small coalition of super users who contribute a LOT of reviews to Yelp.

This isn't an issue when it comes to looking at an average rating score. Whether you're a super user or a novice, your ratings get equal weight (unless your review gets filtered out), and a single rating can't swing things much, because there's a maximum of 5 stars.

But when it comes to variables that don't have a maximum value, things can get a little crazy. For example, one business I looked at had 33 reviews. When I took a look at how many photos each user had previously submitted to Yelp, I found that while most users had contributed zero or very few photos, one user had submitted 9,452 photos to Yelp. Look at this graph of each user's photo count; it's absurd (keep in mind that the scale of the graph maxes out at 1,000 photos):

This presents a serious problem. A single person skews the average to an absurd degree: only two users' photo counts exceed the mean. It's like having the valedictorian in your math class. They completely wreck the grading curve.

With this in mind, for all other variables besides Yelp rating, I chose to use the median average. For our purposes, a median is actually useful because it gives us a number where half the users in a group fall below that number and half are above it. The median is the proverbial C student, smack in the middle of the demographic.

Accordingly, the analysis below relies on the mean averages of user ratings, and the median averages of photo counts, review counts, and friend counts. I also compared the percentage of visible reviews left by users who set up profile images against the same percentage for filtered reviews.
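To make the mean-versus-median point concrete, here is a minimal sketch in Python. The `photo_counts` list is made up for illustration (it is not my raw data); it only mimics the situation described above, with 33 reviewers, most of them with zero or very few photos, and one power user with 9,452.

```python
from statistics import mean, median

# Hypothetical photo counts for 33 reviewers (illustrative only, not the real data).
# Most users have zero or few photos; one power user has 9,452.
photo_counts = [0] * 20 + [1, 2, 3, 4, 5, 10, 20, 50, 100, 200, 300, 640, 9452]

avg = mean(photo_counts)      # pulled far upward by the single outlier
mid = median(photo_counts)    # the middle value once the counts are sorted
above_mean = sum(1 for count in photo_counts if count > avg)

print(f"reviewers: {len(photo_counts)}")
print(f"mean: {avg:.1f}")
print(f"median: {mid}")
print(f"reviewers above the mean: {above_mean}")
```

With these invented numbers the mean lands above 300 photos while the median is 0, and only two reviewers sit above the mean. That is why the median is the better summary for counts that have no upper limit.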

In each comparison, the first figure will be from the visible reviews, while the second will be from filtered reviews.

Average Yelp Rating

This wasn't nearly as exciting as I expected (and hoped) it would be.

- Restaurant: 4.1 vs 4.1

- Auto Shop: 4.4 vs 4.1

- Plumber: 4.4 vs 4.1

- Clothing: 4.3 vs 4.9

- Golf Course: 3.2 vs 3.6

In two cases, the average score for visible reviews was greater than that of filtered reviews. In one case, they were equal, and in two cases, the filtered reviews' average rating was greater than the visible review ratings.

This is the sort of fairly random distribution that you would expect if Yelp's algorithm didn't take the rating into account. Basically, Yelp isn't stealing your five star reviews.

Percentage of Users with Profile Images

These days, social media has a huge impact on business and culture. Consequently, it has become imperative to understand who a user is in order to have a better understanding of their viewpoint.

With this in mind, it's easy to see how Yelp might be more mistrusting of an anonymous user who doesn't add a profile photo, versus someone who does. And it appears the data supports this assumption.

- Restaurant: 71% vs 50%

- Auto Shop: 67% vs 62%

- Plumber: 49% vs 22%

- Clothing: 88% vs 33%

- Golf Course: 88% vs 60%

There is a very clear trend here. With the auto shop the difference is pretty small, but in every case visible reviews were more likely to have profile images associated with them. Aside from the auto shop, there was a gap of 21 points or more between visible reviews and filtered reviews in the use of profile photos.

Within my data sample, the overall percentage of visible versus filtered reviews with profile images was 71% versus 45%. The pretty clear takeaway from this is that the presence of a profile image does have an effect on review filtering.
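The point gaps mentioned above are easy to recompute from the five pairs of percentages in the list. A small Python sketch, where the dictionary name `profile_image_pct` is just a label chosen for this example:

```python
# Percentage of reviews whose author has a profile image, as (visible, filtered),
# copied from the list above.
profile_image_pct = {
    "Restaurant": (71, 50),
    "Auto Shop": (67, 62),
    "Plumber": (49, 22),
    "Clothing": (88, 33),
    "Golf Course": (88, 60),
}

# Gap in percentage points between visible and filtered reviews for each business.
gaps = {
    name: visible - filtered
    for name, (visible, filtered) in profile_image_pct.items()
}

# Print the businesses from the smallest gap to the largest.
for name, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{name}: {gap} points")
```

The auto shop comes out at 5 points, and every other business shows a gap of at least 21 points.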

Number of Yelp Reviews Posted

The number of reviews posted by a Yelp user does appear to significantly impact the visibility of their reviews.

- Restaurant: 6 vs 1.5

- Auto Shop: 7 vs 1

- Plumber: 7 vs 2

- Clothing: 10 vs 2

- Golf Course: 36 vs 2.5

The difference here is pretty stark. The plumbing business had the smallest gap, and even so the median visible reviewer had posted 3.5 times as many reviews as the median filtered reviewer.
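For anyone who wants to check the ratios, this short Python sketch divides the median review count of visible reviewers by that of filtered reviewers for each business. The figures are the ones listed above; the variable names are simply chosen for this example.

```python
# Median number of reviews written, as (visible reviewers, filtered reviewers),
# copied from the list above.
median_review_counts = {
    "Restaurant": (6, 1.5),
    "Auto Shop": (7, 1),
    "Plumber": (7, 2),
    "Clothing": (10, 2),
    "Golf Course": (36, 2.5),
}

# How many times more reviews the median visible reviewer has written.
ratios = {
    name: visible / filtered
    for name, (visible, filtered) in median_review_counts.items()
}

# The business with the smallest visible-to-filtered ratio.
smallest = min(ratios, key=ratios.get)

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}x")
print(f"smallest gap: {smallest}")
```

The plumber has the smallest ratio at 3.5, and the golf course has the largest at 14.4.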

Looking at the raw data seems to reinforce the conclusion that review count is strongly factored into Yelp's algorithm: the 5 highest review counts for the 66 filtered reviews I looked at were 59, 20, 19, 9, and 9, and only 4.5% of reviewers with more than five reviews were filtered. Once a user's review count is in the high single digits, their reviews are almost guaranteed to show up (unless they've done something to make Yelp really cranky).

In our personal experience, we have seen reviews which had been filtered for months or years suddenly released from purgatory without explanation. Based on the data above, it seems likely that the reviews were unfiltered when users finally posted enough reviews to make Yelp happy.

The takeaway here is to not only encourage your customers to leave reviews for you, but to do so for other businesses as well; to be more active in reviewing their local community's businesses. Once they get past a total of about six or seven reviews, it's very likely that all of their reviews will survive the algorithm's wrath.

Number of Yelp Friends

It appears that the number of Yelp friends that a user has also impacts the visibility of their reviews, but the correlation is a bit noisy when you dig deeper.

- Restaurant: 15.5 vs 2

- Auto Shop: 1 vs 0

- Plumber: 0 vs 0

- Clothing: 7 vs 0

- Golf Course: 7 vs 0

In looking at the averages, there's definitely a gap. However, in looking at the raw data, 10 of the 66 filtered reviews were written by users with 20 or more Yelp friends, with 7 of them having more than 35 friends. A pretty significant chunk of the filtered reviews were written by social butterflies.

It appears that while the friend count does have some impact, it's not nearly as formative as the other factors described above. The takeaway is that having Yelp friends helps, but can be outweighed by other factors.

Number of Photos Posted

On the surface, the number of photos posted by Yelp users doesn't appear to have a profound impact on review visibility…

- Restaurant: 4.5 vs 0.5

- Auto Shop: 7 vs 0

- Plumber: 0 vs 0

- Clothing: 0 vs 0

- Golf Course: 2 vs 0

Obviously, there are no instances in which users with filtered reviews averaged more photos than those with visible reviews. But for two businesses, both medians were 0, and the golf course comparison isn't terribly compelling either.

Still, the raw data tells a very interesting story: there are very, very few filtered reviews posted by users with significant photo counts. Of the 66 filtered reviews, the top 5 photo counts were 26, 21, 6, 5, and 2. That's a seriously drastic fall off. 94% of the filtered reviews were posted by users that had submitted 2 or fewer photos to Yelp.
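The 94% figure follows from the top five counts alone. Since the fifth-highest count is 2, every filtered review outside the top five must also come from a user with 2 or fewer photos. A minimal Python sketch of that arithmetic (the variable names are mine, chosen for this example):

```python
total_filtered = 66
top_five_photo_counts = [26, 21, 6, 5, 2]  # the five highest counts, as reported above
threshold = 2

# This shortcut only works when the threshold is at least the fifth-highest count:
# every review outside the top five then has a count at or below the threshold.
assert threshold >= min(top_five_photo_counts)

# Only entries in the top five can exceed the threshold.
over_threshold = sum(1 for count in top_five_photo_counts if count > threshold)
share_at_or_below = (total_filtered - over_threshold) / total_filtered

print(f"{total_filtered - over_threshold} of {total_filtered} "
      f"({share_at_or_below:.0%}) have {threshold} or fewer photos")
```

Four of the 66 filtered reviewers had more than 2 photos, which leaves 62 of 66, or about 94%.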

The takeaway here is that while a lot of Yelp users don't post photos, posting even a small handful of photos has a pretty good likelihood of getting a user's reviews out of purgatory.

The Final Analysis of Our Little Yelp Experiment

To compact the couple thousand words or so above into something short and sweet, here's what I think. First of all, I don't see evidence that the rating of a review has an impact on whether a review is filtered or not.

Secondly, the other factors in play all definitely have some sway on whether a review is filtered. If I were to rank these four variables in terms of importance, taking into account a user's time investment (it'd be great if every user wrote 10 reviews, but that takes a lot of time), this would be my ranking:

- Profile Image

- Photo Submissions

- Number of Reviews

- Number of Friends

Setting a profile image and uploading a couple photos of a business requires very little time, and the data indicates that these have a significant impact on the likelihood of a review being visible. After that, the quantity of reviews is very important, but the magical threshold where you're almost guaranteed to not be filtered is fairly high, around 6 to 9 reviews. The number of friends matters as well, but doesn't outweigh the factors above (and for users who aren't inclined to socialize on Yelp, it's going to be tough to convince them to do otherwise).

The purpose of this analysis was to provide some actionable advice for business owners. So, if you're managing a business and you want all of your reviews to show up, the data suggests that you don't need to target Yelp super users. You just need to encourage your loyal customers to not just write a positive review, but also to take a couple minutes to add a profile image and take and submit a couple photos of your business. Then, perhaps nudge them to leave reviews for other businesses as well to get their review count up. These little extra actions can significantly raise the odds that their review of your business will show up.

Source: https://www.postmm.com/social-media-marketing/yelp-reviews-not-recommended-data-analysis/
